After years of training hundreds of models and building AI solutions, I kept running into the same fundamental issues. AI won’t get better through larger models alone. To become truly useful, we need to find a way to leverage their strengths as part of a new kind of computer system.

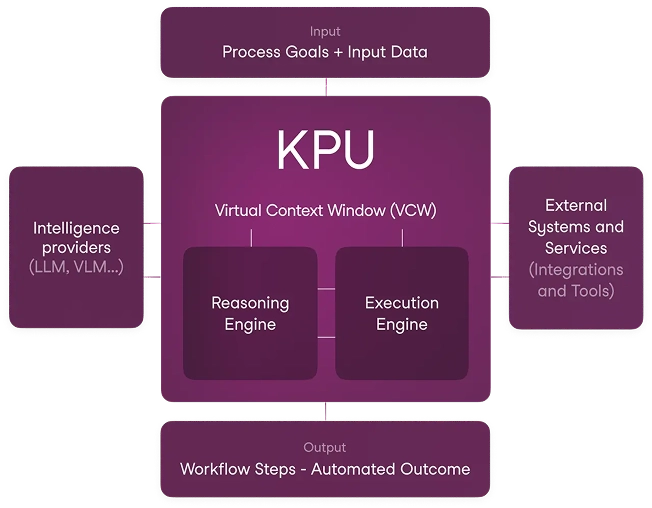

That’s why we built the KPU—Maisa’s AI Computer

Manuel Romero, Co-Founder & CSO at Maisa

Written by: Manuel Romero | Published: 05/03/2025

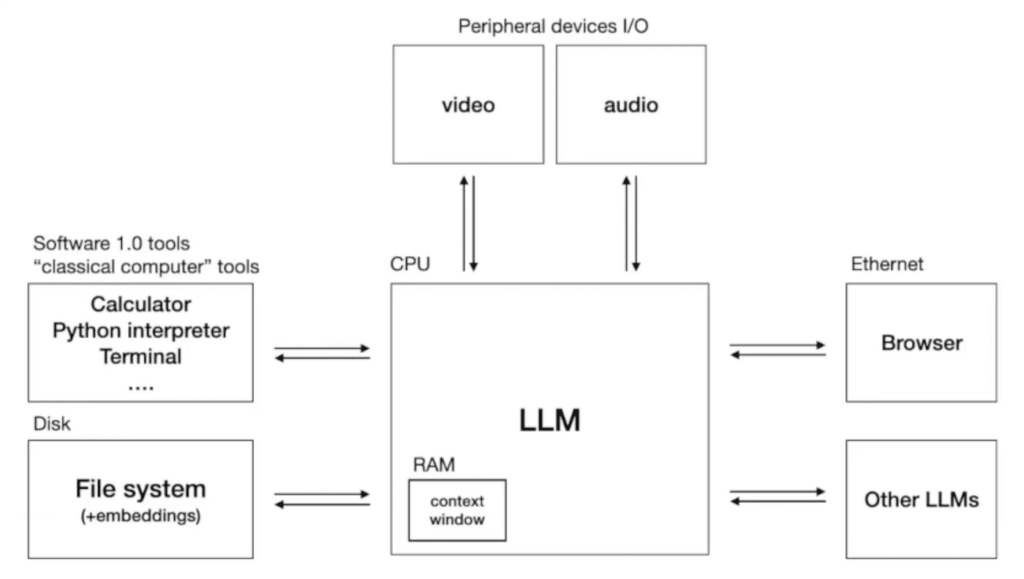

Andrej Karpathy’s LLM OS concept: envisioning large language models as the core of future operating systems

Throughout history, humans have developed computational systems, formal logic, and scientific methods to verify our thinking. Can we do the same for AI?

Every technological revolution has required us to think outside the box, rethink how we do things, and design new architectures and systems.

AI is no different.

We are now familiar with the power of AI, but most AI-native systems live in a chat-based interface: an instruction-fine-tuned LLM that lets us hold conversations with the technology and get help with a wide array of tasks.

Even in this configuration, though, some interesting add-ons appear. In ChatGPT, the best-known AI-native system (a chatbot), the LLM calls different tools depending on the task: internet search, a code executor, DALL·E for image generation, internal memory storage, and retrieval.

Here we see an initial approach to the next computing paradigm, one where AI is not an assistant that lives in a chatbot interface but an orchestrator.

Where is AI headed? How can we leverage the intelligence of this technology to reach another level?

Looking at LLMs as chatbots is the same as looking at early computers as calculators. We’re seeing an emergence of a whole new computing paradigm.

Andrej Karpathy

Before operating systems, using a computer meant manually loading programs, writing commands in raw machine code or punch cards, and managing memory and processing power directly. Users had to allocate resources, track execution, and reload programs for each task. This made computing inefficient, time-consuming, and inaccessible to anyone without specialized technical expertise.

Operating systems transformed computing by automating memory allocation, process management, and data storage. This software layer creates an abstraction between users and hardware, so users no longer need to understand the machine's technical peculiarities. By allowing people to interact with computers at a higher level, operating systems made computing accessible and laid the foundation for personal technology, letting users focus on their tasks rather than the underlying mechanics.

At the core of every operating system is the kernel, the component that coordinates processes and manages system resources. The AI Computer takes this further by making AI the kernel—the technology that orchestrates tasks, tools, and processes autonomously. Instead of merely executing predefined instructions, it becomes agentic, meaning it can independently make decisions and take actions to achieve a goal.

Traditional computers operate on static, predefined logic—they execute commands exactly as programmed, following a structured set of rules to process user inputs. Every task requires a sequence of actions—clicks, keystrokes, and manual navigation—where the OS provides abstraction layers to help users manage files, applications, and processes more efficiently. However, the responsibility of executing tasks still lies entirely with the user.

The AI Computer shifts this paradigm by embedding intelligence at its core. Instead of merely processing user commands, it understands objectives, interprets intent, and autonomously orchestrates the necessary steps to achieve the desired outcome.

This represents a deeper level of abstraction—one where users no longer interact with discrete applications to complete a task. Instead of selecting software and manually executing steps, the AI Computer assembles workflows on the fly, dynamically integrating available tools, models, and processes based on the user’s goal. The result is a system that isn’t just a platform for execution but an active problem-solver, capable of reasoning through complex tasks and adapting in real time.

Just as operating systems abstract hardware complexities, the AI Computer abstracts the complexities of working with AI. It manages memory, integrates tools, and orchestrates processes to ensure efficiency and accuracy.

LLMs have context limitations, meaning they can only process a limited amount of information at a time. The AI Computer controls data flow, selecting only the most relevant information for each process, ensuring context-aware execution.
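To make "selecting only the most relevant information" concrete, here is a minimal Python sketch of context selection under a fixed budget. It is an illustration under stated assumptions, not the KPU's implementation: the word-overlap `relevance` score stands in for embedding similarity, the regex `tokenize` stands in for the model's real tokenizer, and the function names and word-count `budget` are hypothetical.

```python
import re


def tokenize(text: str) -> list[str]:
    """Crude word tokenizer (hypothetical stand-in for the model's tokenizer)."""
    return re.findall(r"\w+", text.lower())


def relevance(query: str, chunk: str) -> float:
    """Fraction of distinct query words found in the chunk (stand-in for embedding similarity)."""
    query_words = set(tokenize(query))
    if not query_words:
        return 0.0
    return len(query_words & set(tokenize(chunk))) / len(query_words)


def select_context(query: str, chunks: list[str], budget: int) -> list[str]:
    """Greedily keep the most relevant chunks whose combined size fits the budget."""
    ranked = sorted(chunks, key=lambda ch: relevance(query, ch), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        cost = len(tokenize(chunk))
        # Skip irrelevant chunks and anything that would overflow the window.
        if relevance(query, chunk) > 0 and used + cost <= budget:
            selected.append(chunk)
            used += cost
    return selected


docs = [
    "Invoice 1042 was paid on March 3.",
    "The office plants need watering weekly.",
    "Invoice 1043 is overdue by 12 days.",
]
print(select_context("which invoice is overdue", docs, budget=12))
# → ['Invoice 1043 is overdue by 12 days.']
```

Greedy packing by relevance is the simplest reasonable policy; the partially relevant invoice is dropped only because it would exceed the budget.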

The AI Computer also connects to external tools, such as the internet or APIs, retrieving real-time, accurate data instead of relying solely on the model's static knowledge. This guards against outdated or incorrect responses.

Crucially, the AI core doesn’t generate answers—it orchestrates. By distributing tasks across specialized modules and data sources, it avoids hallucinations, ensuring responses are grounded in fact rather than guesswork.
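The orchestration pattern can be illustrated with a toy Python sketch. It assumes a rule-based `plan` stub in place of the LLM planner and two hypothetical tools (`lookup` over a small in-memory fact table and `add_numbers`); none of these names come from the KPU. What it shows is structural: the answer is assembled only from tool outputs, each tagged with its source, and the system declines rather than guesses when no tool returns data.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical fact table standing in for an external API or database.
FACTS = {"capital of france": "Paris"}


def lookup(key: str) -> Optional[str]:
    return FACTS.get(key.lower())


def add_numbers(arg: str) -> Optional[str]:
    try:
        return str(sum(float(x) for x in arg.split(",")))
    except ValueError:
        return None


TOOLS: dict[str, Callable[[str], Optional[str]]] = {"lookup": lookup, "add": add_numbers}


@dataclass
class Step:
    tool: str
    argument: str


def plan(request: str) -> list[Step]:
    """Hypothetical stand-in for the LLM planner: maps a request to tool calls."""
    text = request.lower().strip().rstrip("?")
    if text.startswith("add "):
        return [Step("add", text[len("add "):])]
    if text.startswith("what is the "):
        return [Step("lookup", text[len("what is the "):])]
    return []


def orchestrate(request: str) -> str:
    """Run the planned steps and answer only from tool outputs, citing each source."""
    evidence = []
    for step in plan(request):
        result = TOOLS[step.tool](step.argument)
        if result is not None:
            evidence.append(f"{result} (source: {step.tool})")
    if not evidence:
        return "I don't have grounded data to answer that."
    return "; ".join(evidence)


print(orchestrate("What is the capital of France?"))  # → Paris (source: lookup)
print(orchestrate("add 2, 3"))  # → 5.0 (source: add)
print(orchestrate("Who won the match?"))  # → I don't have grounded data to answer that.
```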

By managing memory, external data, and orchestration, the AI Computer removes the friction of working with AI, allowing users to focus on objectives without worrying about execution.

Building an AI Computer faces challenges similar to those encountered by early operating systems. Just as operating systems had to overcome hardware constraints and compatibility issues, this new computing paradigm brings its own set of limitations.

Software integration remains a critical hurdle. Today’s applications weren’t designed for AI-native interaction—while they may offer APIs, these interfaces were built for human users rather than AI orchestration. This legacy architecture limits the AI Computer’s ability to fully harness existing software capabilities.

The non-deterministic nature of AI introduces another layer of complexity. An AI Computer must implement guardrails to ensure consistent outputs despite the probabilistic behavior of language models. This challenge becomes more manageable when users control their own inference rather than relying on proprietary models hosted by third parties.
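One common guardrail pattern, shown here as a Python sketch and not necessarily what the KPU does, combines output validation with self-consistency voting: sample the model several times, discard outputs that fail a schema check, and accept a label only if it wins a strict majority. `fake_model` is a hypothetical stand-in for a sampled LLM call, and `ALLOWED_CATEGORIES`, `validate`, and `classify` are illustrative names.

```python
import json
import random
from collections import Counter
from typing import Optional

ALLOWED_CATEGORIES = {"invoice", "receipt", "contract"}


def fake_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a sampled LLM call: usually right, sometimes wrong or malformed."""
    roll = rng.random()
    if roll < 0.7:
        return '{"category": "invoice"}'
    if roll < 0.85:
        return '{"category": "receipt"}'
    return "Sure! The category is invoice."  # free text, not valid JSON


def validate(raw: str) -> Optional[str]:
    """Guardrail: accept only a JSON object whose "category" is an allowed string."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    category = data.get("category")
    if isinstance(category, str) and category in ALLOWED_CATEGORIES:
        return category
    return None


def classify(prompt: str, samples: int = 7, seed: int = 0) -> Optional[str]:
    """Sample several times, drop invalid outputs, and accept only a strict-majority label."""
    rng = random.Random(seed)  # a fixed seed keeps the sketch reproducible
    votes = Counter()
    for _ in range(samples):
        label = validate(fake_model(prompt, rng))
        if label is not None:
            votes[label] += 1
    if not votes:
        return None
    label, count = votes.most_common(1)[0]
    return label if count > samples // 2 else None


print(classify("Classify: 'Invoice #1042, total due 300 EUR'"))
```

Returning `None` instead of a low-confidence label lets the caller retry or escalate. Controlling your own inference, as the paragraph above notes, also makes knobs like the sampling seed available in the first place.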

Memory management in an AI Computer mirrors traditional computing challenges but with new dimensions. The system must orchestrate different types of memory—from rapid-access context windows to extensive long-term storage—while ensuring AI components maintain appropriate context throughout their operations.
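A minimal Python sketch of the tiered idea, loosely analogous to paging in a traditional OS: a bounded working context holds what the model currently sees, overflow is archived to a long-term store rather than lost, and relevant archived items can be paged back in. `TieredMemory`, its capacity counted in items rather than tokens, and the word-overlap recall rule are all hypothetical simplifications, not a description of how the KPU manages memory.

```python
import re
from collections import deque


def _words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


class TieredMemory:
    """Hypothetical two-tier memory: a bounded working context plus an unbounded long-term store."""

    def __init__(self, context_capacity: int) -> None:
        self.capacity = context_capacity
        self.context: deque[str] = deque()  # what the model "sees" right now
        self.long_term: list[str] = []      # archived items, searchable on demand

    def remember(self, item: str) -> None:
        """Add an item to the context, archiving the oldest items when it overflows."""
        self.context.append(item)
        while len(self.context) > self.capacity:
            self.long_term.append(self.context.popleft())

    def recall(self, query: str) -> list[str]:
        """Find archived items sharing a word with the query and move them back into context."""
        terms = _words(query)
        hits = [item for item in self.long_term if terms & _words(item)]
        for item in hits:
            self.long_term.remove(item)
            self.remember(item)  # may archive older context items in turn
        return hits


memory = TieredMemory(context_capacity=2)
for note in ["Customer prefers email contact.", "Order 881 shipped Tuesday.", "Refund approved for order 790."]:
    memory.remember(note)
print(memory.recall("How does the customer want to be contacted?"))
# → ['Customer prefers email contact.']
print(list(memory.context))
# → ['Refund approved for order 790.', 'Customer prefers email contact.']
```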

While training hundreds of models, developing intricate RAG systems, and building applied-AI solutions, I kept running into the same fundamental challenges. The answer wasn't to train even bigger LLMs or to refine their chain-of-thought reasoning; we needed a different approach.

Facing these challenges—and inspired by Andrej Karpathy’s vision of an LLM-powered OS—we created the KPU.

The KPU is Maisa’s AI Computer, a system designed to orchestrate components and execute tasks based on intent. Like a traditional OS, it abstracts AI’s core complexities—from factual responses and real-time data to context management and prompt engineering—creating a foundation for building reliable AI-powered systems.

The KPU keeps evolving as we push it into new territories. Each advancement shows us more clearly that embedding intelligence into computing systems is how we’ll make AI truly useful.
